Earkick Mental Health AI

Earkick's technology has proven efficacy and takes a novel approach that enables fine-grained, quantifiable mental health monitoring and assessment, with the goal of improving users' mental health and quality of life.

To monitor mental health, we combine several sources of data, including insights from our online AI chat, to better understand the context a user is in and how that context may influence their mental health.

The data we consider for mental health prediction includes:

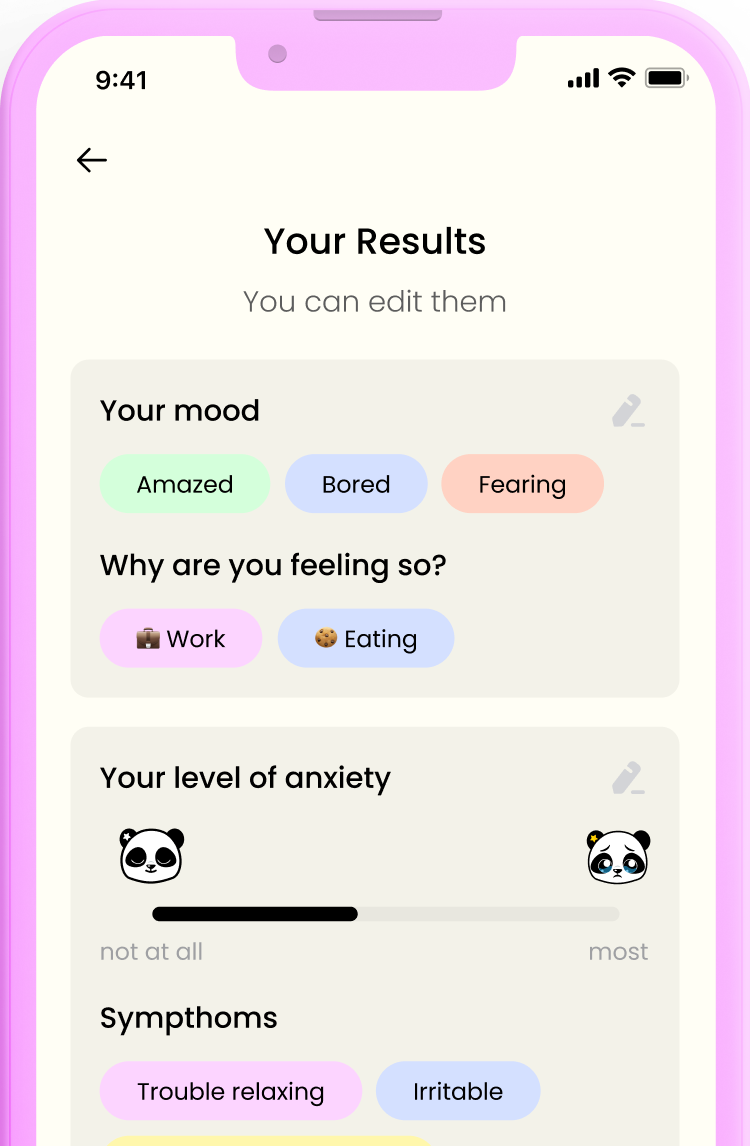

Audio, video and text memos, plus typing behavior, captured when users describe their day, their mood and their anxiety levels.

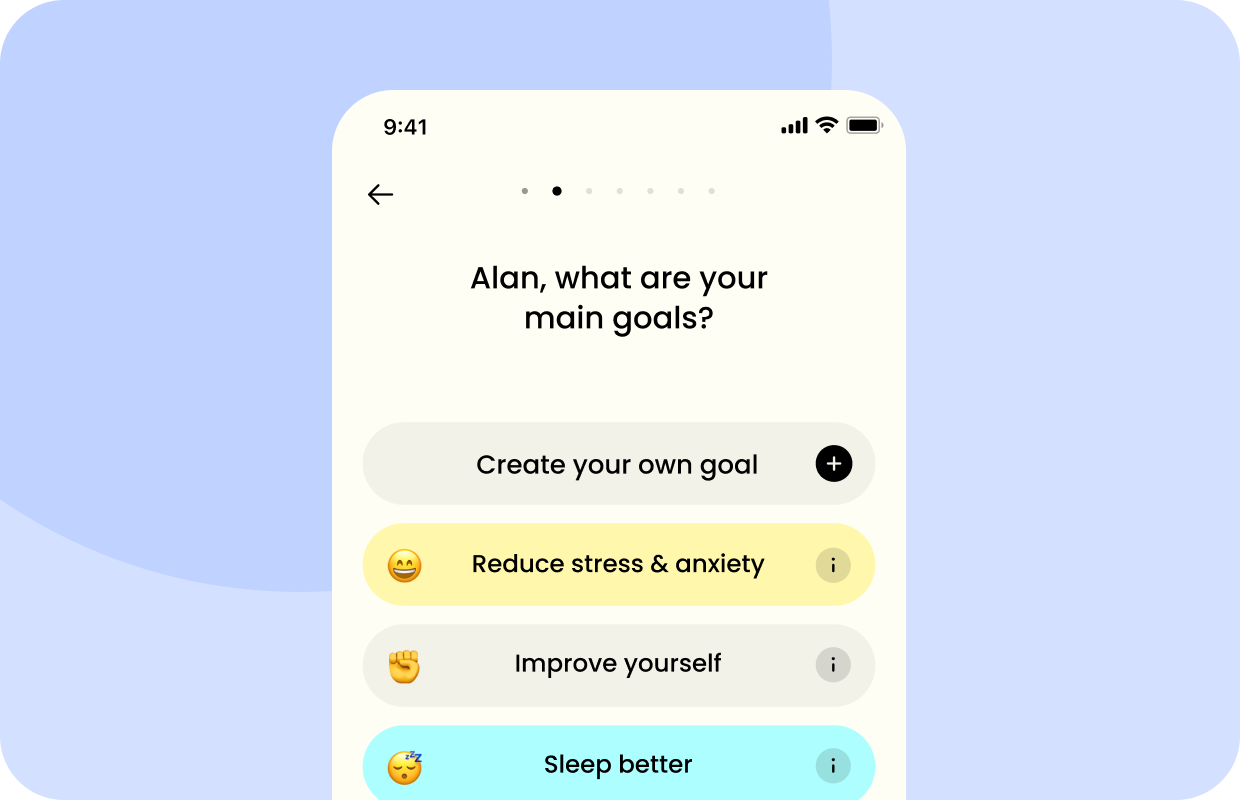

Tasks the user performs toward achieving their goals (e.g. sleeping more than 7 hours).

Sleep duration

Exercises

Meditation / mindfulness minutes

Heart rate measurements

Post-processed features: in the audio domain, voice pitch, jitter and speech rate; in the visual domain, facial action units (such as eyebrow movement), eye gaze and eye blink rate.

Context information, such as work, friends or exercise, that contributes to how users are feeling.

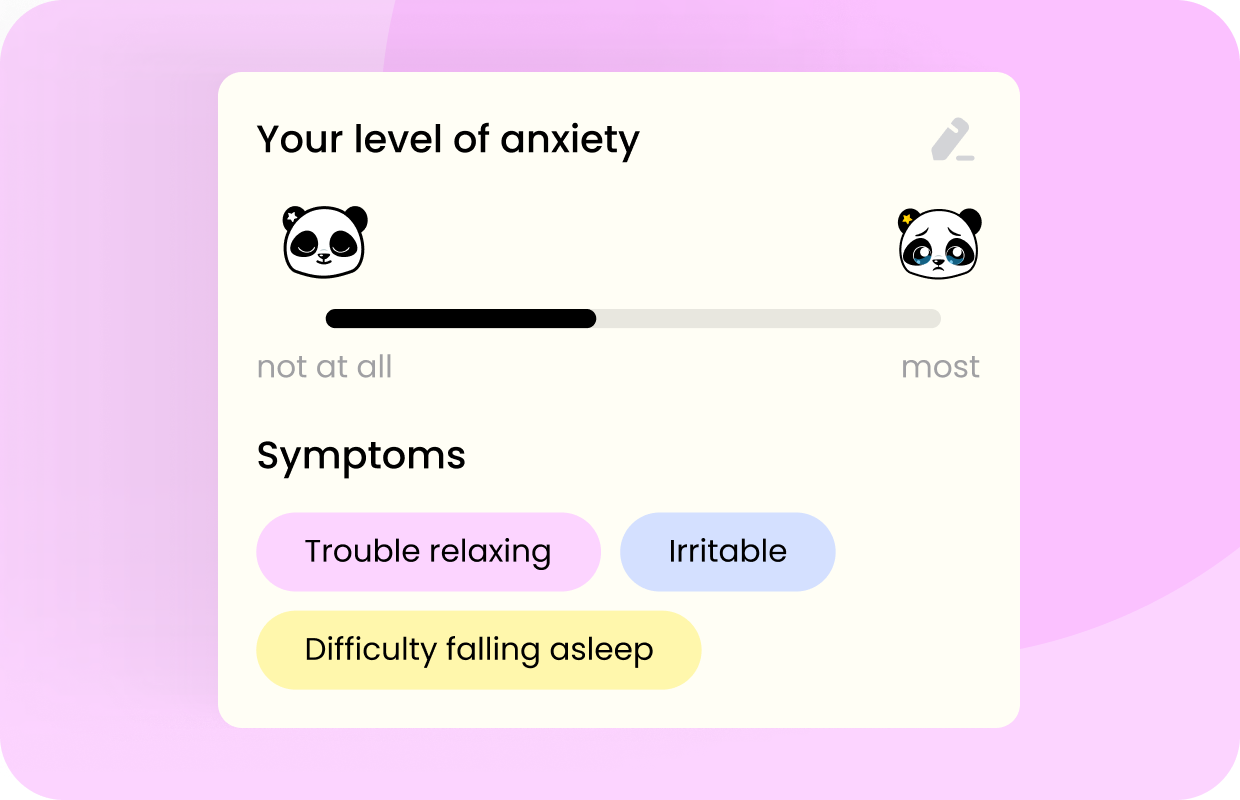

Symptoms that are clinically relevant to diagnosing mental health issues. For example, a user may record a symptom like “Nervousness” or “Constantly worrying”, which corresponds to items on clinical assessment questionnaires such as the Generalized Anxiety Disorder 7 (GAD-7).

Menstrual cycle

Location data, if the user permits us to use it.

Weather data, such as sunlight hours and cloud coverage, if the user permits us to use their location.

Typing timestamps, from which we extract features describing when people type.

Panic attack time, location, duration, intensity and symptoms
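Two of the sources above, voice jitter and typing timestamps, can be turned into numeric features with very little code. The sketch below is illustrative only and assumes that pitch periods (in seconds) have already been extracted from a voice memo by a pitch tracker, and that key presses arrive as timestamps in seconds; the function names are hypothetical and not taken from Earkick's code. Local jitter follows its common definition: the mean absolute difference between consecutive pitch periods divided by the mean period.

```python
from statistics import mean, median, pstdev


def local_jitter(periods: list[float]) -> float:
    """Local jitter: mean absolute difference between consecutive pitch
    periods divided by the mean period (a dimensionless ratio)."""
    if len(periods) < 2:
        raise ValueError("need at least two pitch periods")
    diffs = [abs(b - a) for a, b in zip(periods, periods[1:])]
    return mean(diffs) / mean(periods)


def typing_features(timestamps: list[float]) -> dict[str, float]:
    """Summary statistics of inter-key intervals for one typing session.

    `timestamps` are key-press times in seconds (an assumed input format)."""
    ts = sorted(timestamps)
    duration = ts[-1] - ts[0] if len(ts) >= 2 else 0.0
    if duration <= 0:
        raise ValueError("need at least two distinct timestamps")
    intervals = [b - a for a, b in zip(ts, ts[1:])]
    return {
        "mean_interval": mean(intervals),
        "median_interval": median(intervals),
        "std_interval": pstdev(intervals),
        "keys_per_second": (len(ts) - 1) / duration,
    }


# Hypothetical inputs: pitch periods (s) from a voiced segment, key-press times (s).
print(local_jitter([0.010, 0.011, 0.010, 0.012]))
print(typing_features([0.0, 0.2, 0.5, 0.9]))
```

Real pipelines would also segment typing into sessions and discard long pauses before computing intervals; this sketch treats all timestamps as a single session.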

The novelty of our approach lies in:

01. Smart mental health assessment

Integrating numerous channels of information in an innovative way, where each channel contributes a different perspective and additional value toward solving the puzzle of mental health assessment.

(Status: App-based measurements completed).
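The channel integration described in this item can be pictured with a minimal sketch, assuming each channel has already been reduced to one number per day (for example sleep hours, resting heart rate or self-reported mood). Each channel is z-scored against the user's own history, so that channels with different units become comparable, and one day's normalized values are collected into a single feature vector. The channel names and the per-user normalization are illustrative assumptions, not a description of Earkick's pipeline.

```python
from statistics import mean, pstdev


def zscore_channels(history: dict[str, list[float]]) -> dict[str, list[float]]:
    """Normalize each channel against the user's own history.

    A channel with zero variance carries no day-to-day signal and maps to zeros."""
    normalized = {}
    for channel, values in history.items():
        mu, sigma = mean(values), pstdev(values)
        normalized[channel] = [0.0 if sigma == 0 else (v - mu) / sigma for v in values]
    return normalized


def fuse_day(normalized: dict[str, list[float]], day: int) -> list[float]:
    """Build one feature vector for `day`, ordering channels by name so
    every day's vector has the same layout."""
    return [normalized[channel][day] for channel in sorted(normalized)]


# Hypothetical three-day history for one user: one value per channel per day.
history = {
    "sleep_hours": [6.0, 7.0, 8.0],
    "resting_heart_rate": [60.0, 60.0, 60.0],
    "mood": [2.0, 3.0, 4.0],
}
print(fuse_day(zscore_channels(history), day=0))
```

This sketch assumes every channel has a value for every day; real data has gaps (days without a voice memo or heart rate reading) that would need imputation or masking.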

02. Decode a user's emotional state

Using the latest machine learning models to decode a user’s emotional state and to create features that predict outcomes such as anxiety levels, mood disorders, panic attacks and other irregularities in behavior.

(Status: First models have been prepared).
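To illustrate how features can feed an outcome predictor, the sketch below trains a plain logistic regression with batch gradient descent to output the probability of a high-anxiety day. It assumes standardized input features and binary labels (1 = high-anxiety day) from some source such as self-reports; the toy data is invented. Logistic regression is a stand-in chosen for brevity, since the text does not say which models Earkick uses.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(w: list[float], x: list[float]) -> float:
    """Probability of the positive class; the last weight is the bias."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + w[-1])


def train_logistic(X: list[list[float]], y: list[int],
                   lr: float = 0.5, epochs: int = 500) -> list[float]:
    """Fit logistic regression by batch gradient descent on the mean log-loss."""
    n, d = len(X), len(X[0])
    w = [0.0] * (d + 1)
    for _ in range(epochs):
        grad = [0.0] * (d + 1)
        for xi, yi in zip(X, y):
            err = predict_proba(w, xi) - yi  # derivative of log-loss w.r.t. the logit
            for j in range(d):
                grad[j] += err * xi[j]
            grad[d] += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad)]
    return w


# Invented toy data: one standardized feature (sleep duration), label 1 = high-anxiety day.
X = [[-1.5], [-0.5], [0.5], [1.5]]
y = [1, 1, 0, 0]
w = train_logistic(X, y)
print(predict_proba(w, [-1.0]), predict_proba(w, [1.0]))
```

On this toy data the learned weight on sleep duration is negative, so shorter-than-usual sleep raises the predicted probability of a high-anxiety day.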

03. Health exercises based on you

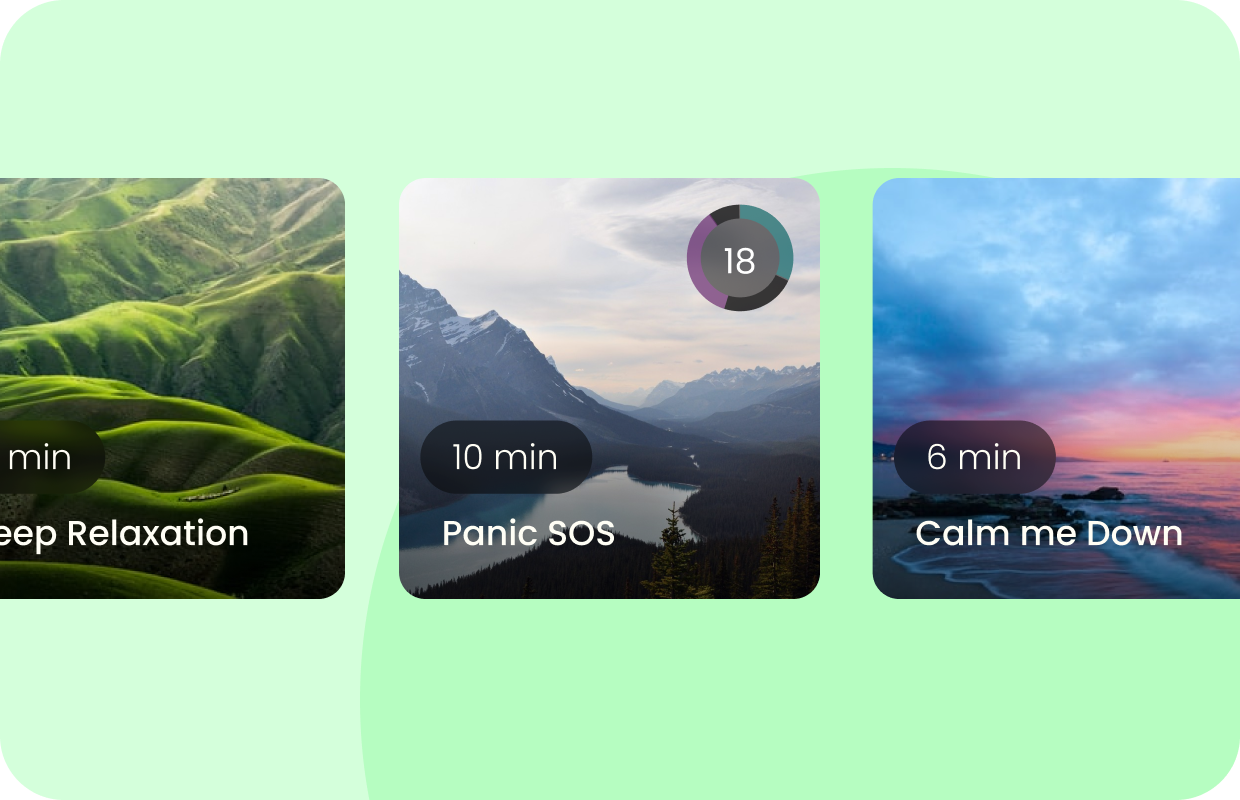

Recommending in-the-moment mental health exercises based on cognitive behavioral therapy (CBT), informed by our emotion recognition and mood/anxiety prediction models.

(Status: upcoming feature).
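The recommendation step in this item can be pictured as a policy that maps model outputs to an exercise. The sketch below is a hypothetical rule-based policy: the thresholds, the exercise labels and the `Prediction` fields are assumptions for illustration, not Earkick's exercise catalogue or logic.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    anxiety_prob: float  # estimated probability of elevated anxiety, 0..1
    mood: float          # predicted mood on an assumed -1 (low) .. 1 (high) scale


def recommend_exercise(pred: Prediction) -> str:
    """Map model outputs to one CBT-informed exercise using hypothetical thresholds."""
    if pred.anxiety_prob >= 0.7:
        return "paced breathing"         # settle acute arousal before cognitive work
    if pred.anxiety_prob >= 0.4:
        return "worry thought record"    # write down and re-examine anxious thoughts
    if pred.mood < -0.3:
        return "behavioral activation"   # plan a small, rewarding activity
    return "brief reflection"            # low-intensity default check-in


print(recommend_exercise(Prediction(anxiety_prob=0.55, mood=0.1)))  # worry thought record
```

Checking the most urgent signal first means it wins when several conditions hold at once; a learned policy could later replace the rules behind the same interface.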

04. Privatized learning

Built-in privatized learning that protects users’ identities: we do not collect email addresses or demographic data such as name, age, gender or location.
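The text does not specify how privatized learning works. One widely used technique in this space is differential privacy, and the sketch below, offered as an illustration rather than as Earkick's method, releases the mean of bounded mood scores with Laplace noise calibrated to the mean's sensitivity. The function name, the `lo`/`hi` clipping bounds and the choice of mechanism are all assumptions.

```python
import random
from typing import Optional


def dp_mean(values: list[float], lo: float, hi: float, epsilon: float,
            rng: Optional[random.Random] = None) -> float:
    """Epsilon-differentially private mean via the Laplace mechanism.

    Values are clipped to [lo, hi]; with the number of users n treated as
    public, changing one user's value shifts the mean by at most (hi - lo) / n,
    so the noise scale is that sensitivity divided by epsilon."""
    if not values or epsilon <= 0 or hi <= lo:
        raise ValueError("need non-empty values, epsilon > 0 and hi > lo")
    if rng is None:
        rng = random.Random()
    clipped = [min(max(v, lo), hi) for v in values]
    n = len(clipped)
    scale = (hi - lo) / (n * epsilon)
    # The difference of two i.i.d. exponentials with mean `scale` is Laplace(0, scale).
    noise = rng.expovariate(1 / scale) - rng.expovariate(1 / scale)
    return sum(clipped) / n + noise


# Hypothetical mood scores (1-5) from five users, released with epsilon = 1.
print(dp_mean([2, 4, 3, 5, 1], lo=1, hi=5, epsilon=1.0, rng=random.Random(42)))
```

Smaller `epsilon` values give stronger privacy at the cost of noisier aggregates; with only five users the noise here is large relative to the true mean of 3.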
